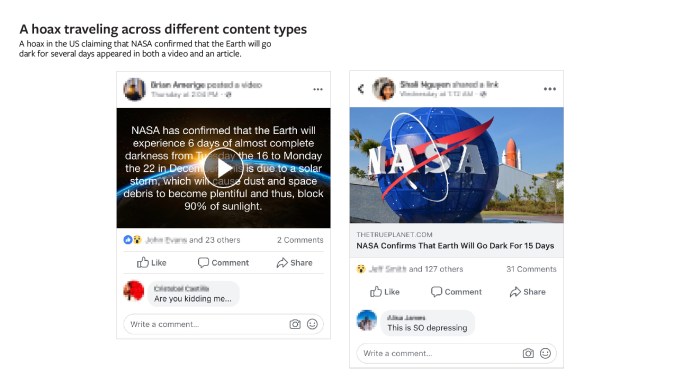

Sometimes fake news lives inside Facebook as photos and videos designed to propel misinformation campaigns, instead of off-site in news articles that can generate their own ad revenue. To combat these politically rather than financially motivated meddlers, Facebook has to be able to detect fake news inside images and in the audio that accompanies video clips. Today it's expanding its photo and video fact-checking program from four countries to all 23 of its fact-checking partners in 17 countries.

“Many of our third-party fact-checking partners have expertise evaluating photos and videos and are trained in visual verification techniques, such as reverse image searching and analyzing image metadata, like when and where the photo or video was taken,” says Facebook product manager Antonia Woodford. “As we get more ratings from fact-checkers on photos and videos, we will be able to improve the accuracy of our machine learning model.”

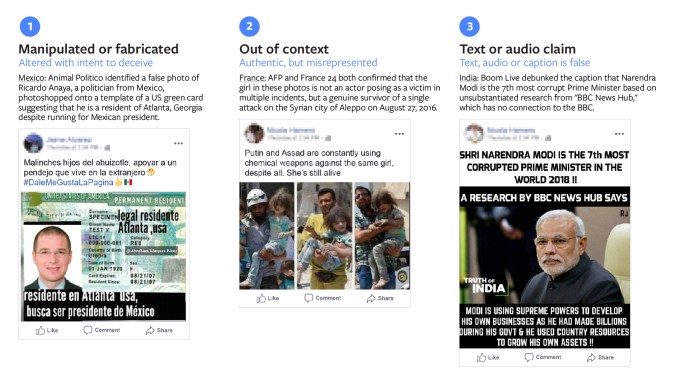

The goal is for Facebook to be able to automatically spot manipulated images, out-of-context images that don’t show what they claim to, and text or audio claims that are provably false.

In last night’s epic 3,260-word security manifesto, Facebook CEO Mark Zuckerberg explained that “The definition of success is that we stop cyberattacks and coordinated information operations before they can cause harm.” That means using AI to proactively hunt down false news rather than waiting for users to flag it. For that, Facebook needs AI training data, which will be produced as exhaust from its partners’ photo and video fact-checking operations.

Facebook is developing technology tools to assist its fact-checkers in this process. “We use optical character recognition (OCR) to extract text from photos and compare that text to headlines from fact-checkers’ articles. We are also working on new ways to detect if a photo or video has been manipulated,” Woodford notes, referring to deepfakes that use AI video editing software to make someone appear to say or do something they haven’t.
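Facebook hasn't said how it compares OCR output to fact-checkers' headlines, but the basic idea can be sketched. The example below assumes the OCR step has already run and handed back the photo's text as a plain string; it then scores that text against a list of debunked headlines using Python's standard `difflib` similarity ratio as a stand-in for whatever matching Facebook actually uses. The function names `normalize` and `match_headlines` and the 0.6 threshold are hypothetical, chosen only for illustration.

```python
import re
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    # Hypothetical helper: lowercase, replace punctuation with spaces, and
    # collapse whitespace so stray OCR characters matter less.
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return " ".join(text.split())


def match_headlines(ocr_text, headlines, threshold=0.6):
    """Hypothetical matcher: return (headline, score) pairs whose similarity
    to the OCR-extracted text meets the assumed threshold, best match first."""
    query = normalize(ocr_text)
    scored = []
    for headline in headlines:
        # SequenceMatcher.ratio() is 2*matches / total length, in [0, 1].
        score = SequenceMatcher(None, query, normalize(headline)).ratio()
        if score >= threshold:
            scored.append((headline, round(score, 3)))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


if __name__ == "__main__":
    # Text a meme image might contain, versus two made-up fact-check headlines.
    meme_text = "BREAKING: Pope endorses candidate X!!"
    debunked = ["Pope endorses candidate X", "Moon landing was staged"]
    print(match_headlines(meme_text, debunked))
```

A character-level ratio tolerates small OCR errors and extra words like "BREAKING," but it is only a sketch; a production system would more plausibly use learned text representations and much larger headline indexes.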

Image memes were one of the most popular forms of disinformation used by the Russian Internet Research Agency (IRA) in its election interference. The problem is that because they’re so easily re-shareable and don’t require people to leave Facebook to view them, they can get viral distribution from unsuspecting users who don’t realize they’ve become pawns in a disinformation campaign.

Facebook could potentially use the high level of technical resources necessary to build fake news meme-spotting AI as an argument for why Facebook shouldn’t be broken up. With Facebook, Messenger, Instagram, and WhatsApp combined, the company gains economies of scale when it comes to fighting the misinformation scourge.
