I see far more research articles than I could possibly write up. This column collects the most interesting of those papers and advances, along with notes on why they may prove important in the world of tech and startups.

In this week’s roundup: a prototype electronic nose, AI-assisted accessibility, ocean monitoring, surveying of economic conditions through satellite imagery and more.

Accessible speech via AI

People with disabilities that affect their voice, hearing or motor function must use alternative means to communicate, but those means tend to be far too slow and cumbersome to approach normal speaking rates. A new system could change that by predicting keystrokes and phrases based on context.

Someone who must type using gaze detection and an on-screen keyboard may only manage between five and 20 words per minute, or roughly one word every three to 12 seconds. That’s a fraction of typical speaking rates, which generally exceed 100 words per minute.

But like everyone else, these people reach for common phrases constantly depending on whom they are speaking to and the situation they’re in. For example, every morning such a person may have to laboriously type out “Good morning, Anne!” and “Yes, I’d like some coffee.” But later in the day, at work, the person may frequently ask or answer questions about lunch or a daily meeting.

The new system designed by Cambridge researchers takes in data like location, time of day, previous utterances and recognized faces in the surroundings to determine context, then makes live suggestions when the user starts typing. So typing “Yes” brings up the option to say “Yes to coffee” while at home. “What room … ” while at work and with a manager and coworker present brings up “What room is the meeting in today?” And so on.
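
The article doesn’t describe the Cambridge team’s model, so here is only a toy sketch of the general idea. It assumes a hypothetical store of past phrases, each tagged with the contexts it was used in. The sketch filters by what the user has typed so far, then ranks by how many current context tags (place, time of day, recognized faces) match, breaking ties by how often the phrase has been used. A real system would presumably rely on a language model rather than exact prefix matching; `Phrase` and `suggest` are illustrative names, not the researchers’ code.

```python
from dataclasses import dataclass, field


@dataclass
class Phrase:
    text: str
    # Context tags seen when this phrase was used before (hypothetical schema:
    # location, time of day, recognized faces, etc.)
    contexts: set = field(default_factory=set)
    uses: int = 0  # how often the user has said it


def suggest(prefix, context, phrases, k=3):
    """Return up to k stored phrases that start with what the user has typed,
    ranked first by how many context tags match, then by past usage."""
    prefix = prefix.lower()
    candidates = [p for p in phrases if p.text.lower().startswith(prefix)]
    ranked = sorted(
        candidates,
        key=lambda p: (len(p.contexts & context), p.uses),
        reverse=True,
    )
    return [p.text for p in ranked[:k]]


phrases = [
    Phrase("Yes to coffee", {"home", "morning"}, uses=30),
    Phrase("Yes, I'll send it today", {"work"}, uses=12),
    Phrase("What room is the meeting in today?", {"work", "face:manager"}, uses=8),
    Phrase("What room are we in?", {"clinic"}, uses=20),
]

print(suggest("Yes", {"home", "morning"}, phrases))
# ['Yes to coffee', "Yes, I'll send it today"]
print(suggest("What room", {"work", "face:manager", "face:coworker"}, phrases))
# ['What room is the meeting in today?', 'What room are we in?']
```

Ranking on context overlap before raw frequency is what lets a rarely used but situation-specific phrase beat a habitual one, as in the meeting-room example above.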

Early tests show that this could eliminate anywhere from half to nearly all of the keystrokes the person would normally make, and follow-up studies are planned. It’s hard to imagine communication tools for nonverbal people not including something like this within a couple of years, so expect further development and integration with existing artificial speech platforms.

Deep (water) learning

What we don’t know about the ocean would fill … well, the ocean. Systematic testing of currents, acidity, salinity and other factors that affect aquaculture, ecology and shipping has been underway for decades, but the technology is aging. Two collaborations between MIT and Woods Hole should help these measurements happen faster and more easily without sacrificing data quality.

The first is what they call an “ocean profiler,” a sort of capsule that sinks hundreds of meters straight down through the water column, collecting data as it goes. Understanding how nutrients, temperatures and other factors move vertically is a crucial part of oceanography, but existing devices for the task require a ship to stop, drop the device, wait until it’s done, winch it back up, then move to a new spot, perhaps only half a kilometer away, and do it all again.

The new device, called the EcoCTD, can be deployed while the ship is moving: it sinks straight down as the winch pays out line fast enough to keep it from being dragged behind the ship. When it hits the 500-meter mark, it gets reeled in, arriving back at the ship just as the ship reaches the next drop spot. That should make bulk collection of this important (and ever-shifting) data much, much easier.

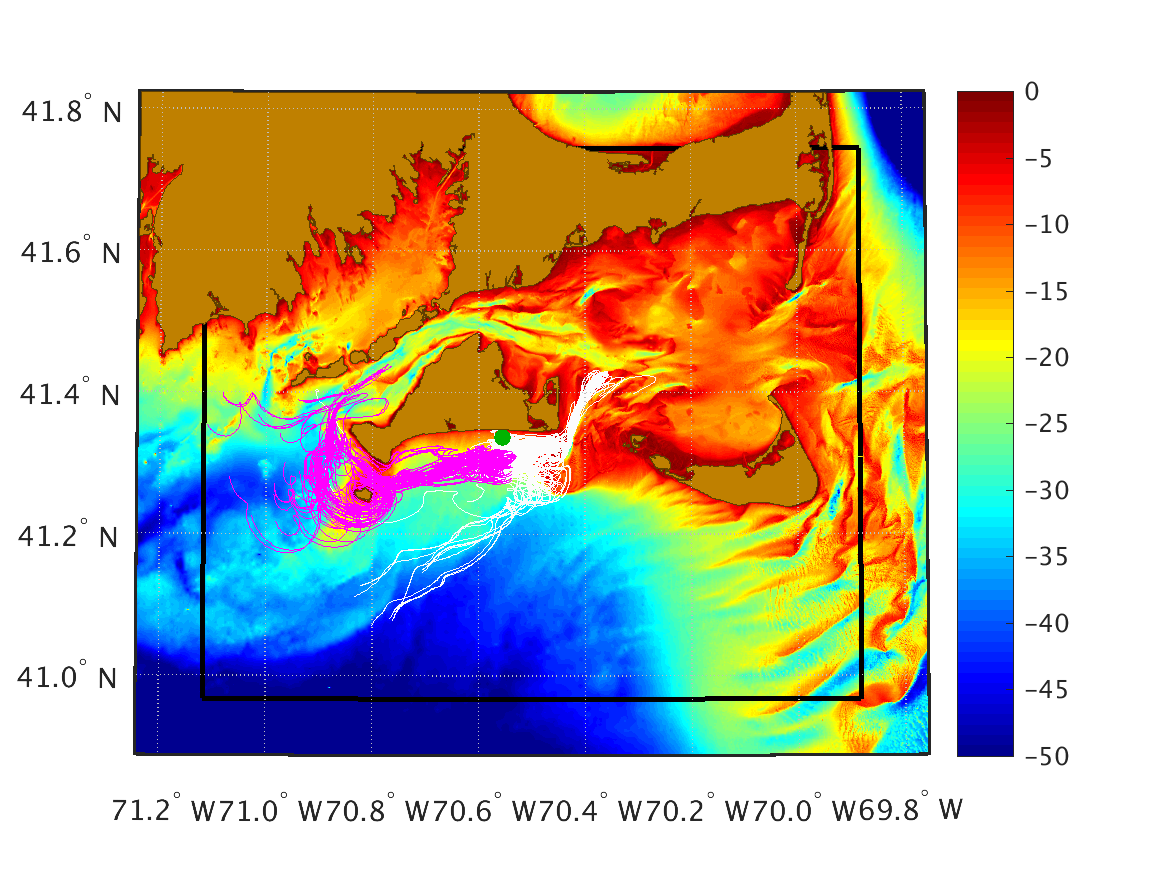

The other advance is a system that analyzes ocean conditions and predicts, in real time, where drifting objects will end up. It’s less about tracking ships (which generally have GPS) than about finding lost equipment, trash, discarded netting and even people. In that last case, it could help the Coast Guard decide which areas to search first, improving a person’s chances of a timely rescue. The system pinpoints short-lived “traps,” zones where currents draw floating objects together. These traps aren’t obvious and can sit in counterintuitive locations, but in tests they repeatedly guided the team to the target objects.

As researcher Thomas Peacock puts it:

People like the Coast Guard are constantly running simulations and models of what the ocean currents are doing at any particular time and they’re updating them with the best data that inform that model. Using this method, they can have knowledge right now of where the traps currently are, with the data they have available. So if there’s an accident in the last hour, they can immediately look and see where the sea traps are. That’s important for when there’s a limited time window in which they have to respond, in hopes of a successful outcome.
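
The article doesn’t explain how the traps are computed. One standard way to flag spots where surface currents are converging at a given instant, and, as far as we understand, the idea behind this line of work, is to take the most negative eigenvalue of the rate-of-strain tensor of the velocity field: strongly negative values mark places where nearby water (and whatever floats in it) is being squeezed together. The sketch below assumes velocities are already available on a regular grid; a real system would feed in forecast or measured currents and handle uncertainty. `attraction_rate` and `strongest_trap` are hypothetical names.

```python
import numpy as np


def attraction_rate(u, v, dx, dy):
    """Smallest eigenvalue of the 2-D rate-of-strain tensor at every grid point.

    u, v: surface velocity components on a regular grid, indexed [y, x].
    dx, dy: grid spacing along x (columns) and y (rows).
    """
    du_dy, du_dx = np.gradient(u, dy, dx)
    dv_dy, dv_dx = np.gradient(v, dy, dx)
    # Symmetric strain tensor S = [[a, b], [b, d]]
    a, d = du_dx, dv_dy
    b = 0.5 * (du_dy + dv_dx)
    # Closed-form smaller eigenvalue of a symmetric 2x2 matrix
    return 0.5 * (a + d) - np.sqrt((0.5 * (a - d)) ** 2 + b ** 2)


def strongest_trap(u, v, dx, dy):
    """Grid index (row, col) where the attraction rate is most negative."""
    s1 = attraction_rate(u, v, dx, dy)
    return np.unravel_index(np.argmin(s1), s1.shape)


# Toy field: water flows toward the line x = 0 from both sides.
y, x = np.mgrid[-1:1:41j, -1:1:41j]
step = 2 / 40
u, v = -np.sin(np.pi * x), np.zeros_like(x)
row, col = strongest_trap(u, v, step, step)
print(x[row, col])  # the convergence line, x = 0
```

Pure rotation (an eddy) produces no strain at all under this measure, which is why a trap is about convergence rather than swirl.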

Both inventions have clear applications in the maritime industry, so you can probably expect spinoffs or licensing deals to emerge in the near future.

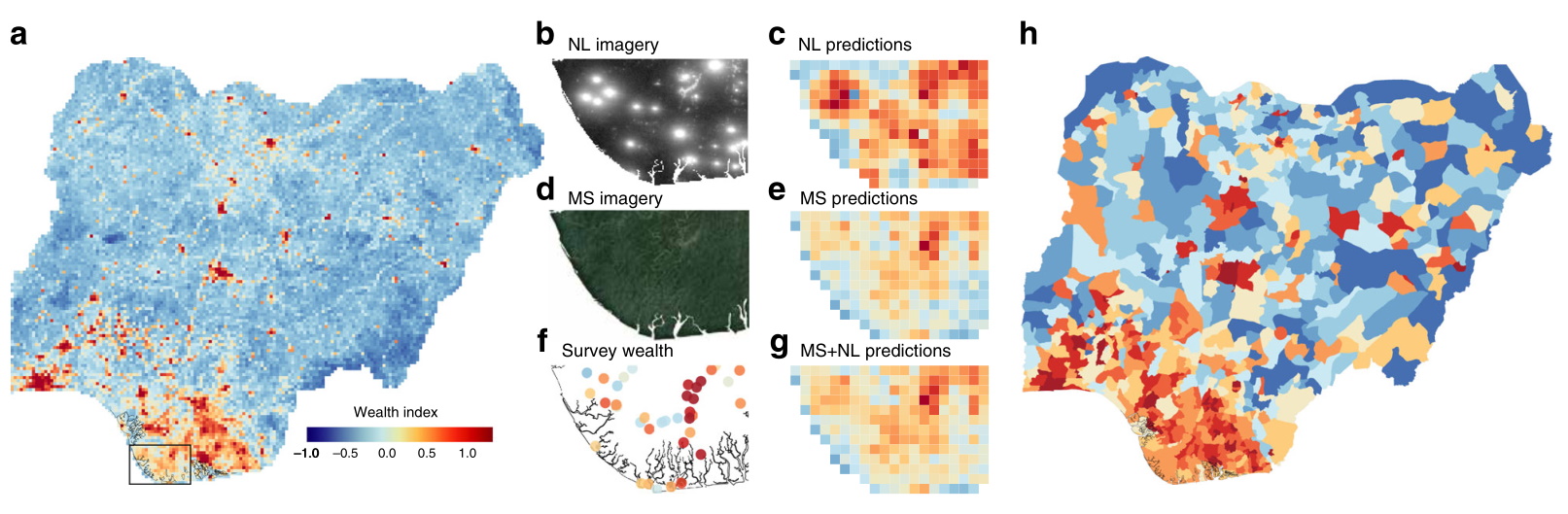

Tracking poverty and opportunity from orbit

Although urban Africa contains some of the fastest-growing and most-watched economies in the world, rural areas are often, like here in the States, left out of the party. Thousands of villages are at various stages of need, and tracking that need to distribute aid and infrastructure effectively is difficult because of the distances involved and the diversity of cultures and approaches. (Any talk of Africa as a single entity, it must be said, is bound to be incredibly inaccurate, but many small communities face similar problems and have similar needs.)

For the last few years, Stanford researchers have been looking for a way to observe and quantify economic status across huge swathes of African countries in order to help plan policies and distribute resources. But data is scarce, which makes outcomes hard to measure.

“Amazingly, there hasn’t really been any good way to understand how poverty is changing at a local level in Africa. Censuses aren’t frequent enough, and door-to-door surveys rarely return to the same people,” said Stanford’s David Lobell.

But high-quality, regularly repeated orbital imagery of rural areas has provided a promising toolset to him and his colleagues. Daytime imagery shows the number and condition of roads, roofs, waterways and other structures, while nighttime images show lights, indicating the presence of electricity and other infrastructure.

Looking at images of 20,000 African villages going back to 2009, the team trained a machine learning system on the imagery and on ground truth data of poverty levels and other measures. Once up and running, the AI did a good job estimating levels of need and how they have changed over time, meaning it could be set loose on new imagery and give fast, accurate feedback on how an area is developing.
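
The study’s model isn’t detailed here, and satellite work of this kind often uses deep neural networks on raw imagery. As a much simpler stand-in that shows the same train-then-apply workflow, the sketch below fits a ridge regression from hypothetical per-village image features to a survey-based wealth index, then compares predictions for the same villages in two snapshots to estimate change. All data is synthetic and the feature names are assumptions for illustration.

```python
import numpy as np


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression; the intercept is not penalized."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Solve (Xc^T Xc + alpha * I) w = Xc^T yc
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    b = y_mean - x_mean @ w
    return w, b


def predict(X, w, b):
    return X @ w + b


# Synthetic "ground truth": one row per village; columns are hypothetical
# image features (road density, metal-roof fraction, night-light intensity).
rng = np.random.default_rng(0)
X_train = rng.random((500, 3))
true_w = np.array([0.8, 1.5, 2.0])
y_train = X_train @ true_w + 0.5 + rng.normal(0, 0.01, 500)  # stand-in wealth index

w, b = fit_ridge(X_train, y_train, alpha=1e-3)

# Apply the trained model to new imagery: the same five villages in an
# earlier and a later (hypothetical) snapshot, where every feature improved.
X_early = rng.random((5, 3))
X_late = np.clip(X_early + 0.1, 0, 1)
change = predict(X_late, w, b) - predict(X_early, w, b)
print(change)  # positive values = estimated improvement per village
```

Once trained, the model is cheap to rerun on each new batch of images, which is where the “fast feedback” advantage over censuses and door-to-door surveys comes from.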

It doesn’t get at the “Why?” of course, but it helps put reliable numbers into circulation that simply didn’t exist or were difficult to come by before. That’s a foundation on which policy and intervention can be built. The team’s work was published in both Science and Nature — a rare double endorsement of the methods and potential effects.

Internet of peaches

Companies like BeeHero are taking the grunt work of apiculture and turning it into a live data dashboard — why not do the same for fruit? This prototype electronic nose developed by Brazilian researchers aims to do just that.

The toaster-sized box sucks in air and tests it for “volatile organic compounds,” the chemical signals that peaches give off at various stages of ripeness. Human experts can do this as well, but only if they’re out in the fields or the fruit has been brought to a warehouse or lab, which isn’t ideal, project leader Sergio Luiz Stevan explained to IEEE Spectrum.

By placing an array of these devices throughout an orchard, growers could get real-time ripeness readings as individual trees approach their ideal harvest time at different rates, owing to differences in soil, ventilation and other factors.
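
The article doesn’t say how the device turns its sensor readings into a ripeness estimate. A common approach for electronic noses is pattern matching across an array of gas sensors. The sketch below assumes a hypothetical four-sensor array and a simple nearest-centroid classifier, with made-up response patterns rather than real peach data. Each reading is scaled to unit length first, so the classifier responds to the *pattern* across sensors rather than to how much gas happened to drift past.

```python
import numpy as np


def normalize(x):
    """Scale response vector(s) to unit length so classification depends on
    the pattern across sensors, not the overall gas concentration."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def train_centroids(readings, labels):
    """Average normalized sensor-array response for each ripeness label."""
    readings = normalize(readings)
    labels = np.asarray(labels)
    classes = sorted(set(labels.tolist()))
    centroids = np.stack([readings[labels == c].mean(axis=0) for c in classes])
    return classes, centroids


def classify(reading, classes, centroids):
    """Return the label whose centroid is closest to the normalized reading."""
    dists = np.linalg.norm(centroids - normalize(reading), axis=1)
    return classes[int(np.argmin(dists))]


# Hypothetical response patterns for a four-sensor array (illustrative only).
patterns = {
    "unripe": [1.0, 0.2, 0.1, 0.1],
    "ripe": [0.3, 1.0, 0.4, 0.2],
    "overripe": [0.1, 0.4, 1.0, 0.8],
}
rng = np.random.default_rng(1)
X, y = [], []
for label, p in patterns.items():
    for _ in range(20):
        # Vary overall intensity and add a little sensor noise
        X.append(np.array(p) * rng.uniform(0.5, 2.0) + rng.normal(0, 0.02, 4))
        y.append(label)

classes, centroids = train_centroids(np.array(X), y)
print(classify(np.array(patterns["ripe"]) * 3.0, classes, centroids))  # ripe
```

In an orchard, each device would run something like `classify` on every air sample and report per-tree results to a dashboard; a production system would likely use a more robust model trained on real measurements.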

Right now the e-nose is still very much a lab prototype, but given its high accuracy and immediate market applications (peaches at first, then other crops that give off such signals), you’d better believe the team is looking into commercialization. The work comes from the Federal University of Technology – Paraná and the State University of Ponta Grossa.

Interstellar parallax

This one doesn’t really have any business potential, but it’s too amazing to pass up. You may remember New Horizons, the probe that revolutionized our understanding of Pluto with its incredibly close flyby and is currently on its way to the outer reaches of the solar system.

Well, New Horizons has gone so far that it has achieved an amazing new milestone: For the first time, images sent back by a spacecraft show stars visibly shifted from where they appear as seen from Earth, purely because of the difference in viewpoint.

This change in perspective is called parallax. Look at an object through your right eye, then through your left: that small shift in its position is part of how you can tell how far away it is. It’s the same with stars, except we can’t see them move with the naked eye. They’re so far away that even from opposite ends of Earth’s orbit, their apparent positions shift by less than two arcseconds, a change only careful telescope measurements can detect. It’s like spotting a lighthouse in the distance, taking a single step to the right and looking again: you can’t see the difference.
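
For a rough sense of scale, the small-angle approximation relates the apparent shift θ to the baseline B between the two viewpoints (assumed roughly perpendicular to the line of sight) and the star’s distance d. The parsec is defined so that a 1 AU baseline produces a 1 arcsecond shift, so the units work out neatly. Proxima Centauri’s distance of about 1.30 parsecs (4.24 light-years) is the standard catalog value; the exact baseline for the New Horizons images isn’t given here, so it stays symbolic.

```latex
\theta \approx \frac{B}{d}\ \text{rad}
\quad\Longrightarrow\quad
\theta\,[\text{arcsec}] \approx \frac{B\,[\text{AU}]}{d\,[\text{pc}]}
\qquad \left(1\,\text{pc} \approx 206{,}265\,\text{AU},\quad 1\,\text{rad} \approx 206{,}265^{\prime\prime}\right)

\text{Proxima Centauri, opposite sides of Earth's orbit } (B = 2\,\text{AU}):\quad
\theta \approx \frac{2}{1.30} \approx 1.5^{\prime\prime}
```

Because θ grows linearly with B, a baseline of B AU yields a shift B/2 times larger than the one Earth’s orbit provides, which is why a probe billions of kilometers out can make the effect plainly visible.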

But New Horizons can, and it’s not even subtle. Two of the nearest stars to us, Proxima Centauri and Wolf 359, have noticeably shifted in a pair of images, one taken from Earth and the other from New Horizons. Queen guitarist and astrophysicist Brian May enthused:

The latest New Horizons stereoscopic experiment breaks all records. These photographs of Proxima Centauri and Wolf 359 – stars that are well-known to amateur astronomers and science fiction aficionados alike — employ the largest distance between viewpoints ever achieved in 180 years of stereoscopy!

Go ahead and get excited about it.