Nearly a year ago, developers Seth Forsgren and Hayk Martiros released a hobby project called Riffusion that could generate music using not audio but images of audio. It sounds counterintuitive (no pun intended), but it worked — my colleague Devin Coldewey got the rundown here.

While their approach had its limitations, Riffusion netted Forsgren and Martiros a lot of attention — not exactly surprising given the curiosity (and controversy) surrounding AI-generated music tech. Millions of people tried Riffusion, according to Forsgren, and the platform was cited in research papers published out of Big Tech companies including Meta, Google and TikTok parent ByteDance.

Some of the attention came from investors as well, it seems.

This year, Forsgren and Martiros decided to commercialize Riffusion, which is now being advised by the musical duo The Chainsmokers and has closed a $4 million seed round led by Greycroft with participation from South Park Commons and Sky9.

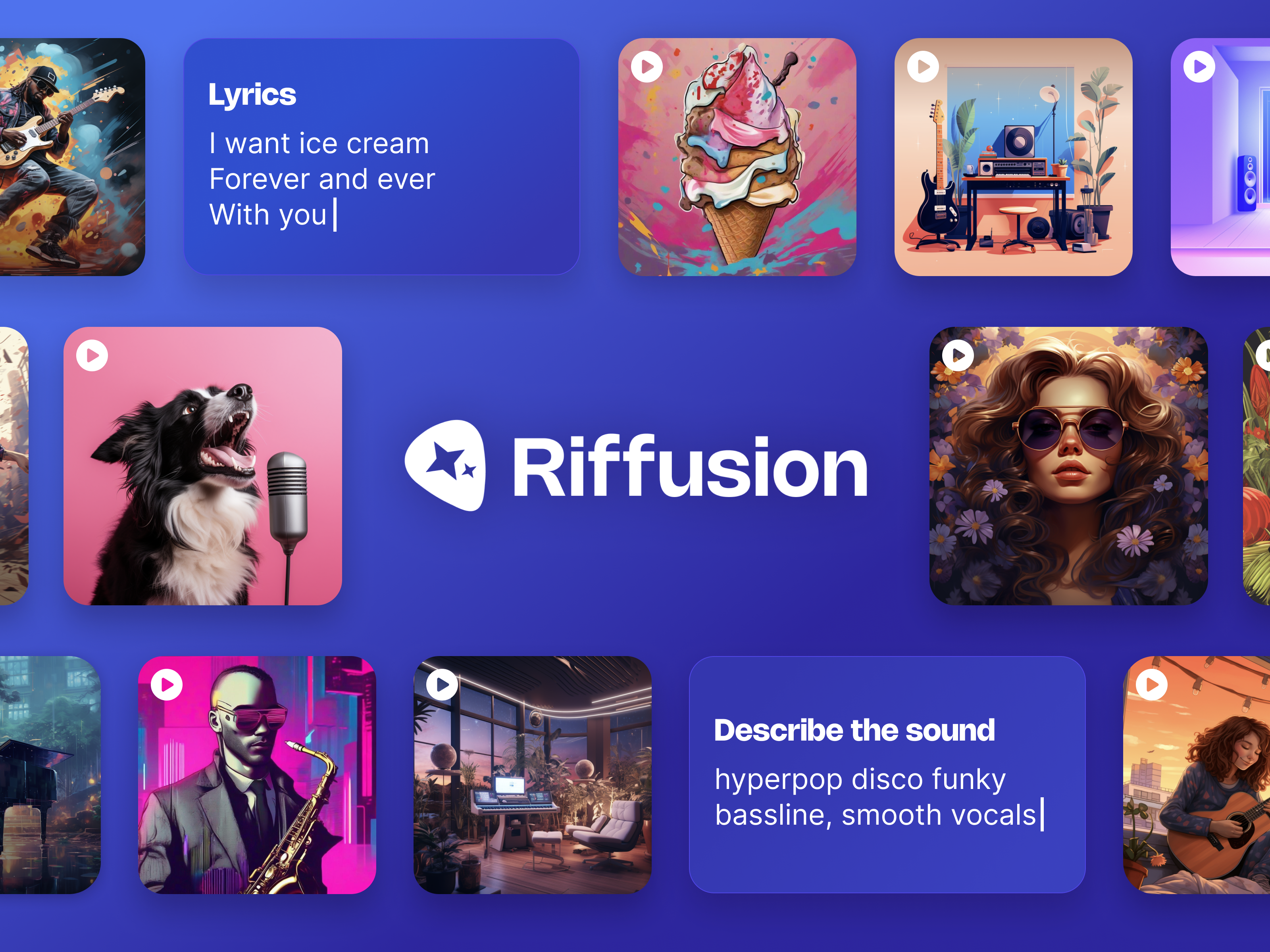

Riffusion is also launching a new, free-to-use app — an improved version of last year’s Riffusion — that allows users to describe lyrics and a musical style to generate “riffs” that can be shared publicly or with friends.

“[The new Riffusion] empowers anyone to create original music via short, shareable audio clips,” Forsgren told TechCrunch in an email interview. “Users simply describe the lyrics and a musical style, and our model generates riffs complete with singing and custom artwork in a few seconds. From inspiring musicians, to wishing your mom ‘good morning!,’ riffs are a new form of expression and communication that dramatically reduce the barrier to music creation.”

Martiros and Forsgren met as undergraduates at Princeton and have spent the last decade playing music together in an amateur band. Forsgren previously founded two venture-backed tech companies, Hardline and Yodel, while Martiros joined drone startup Skydio as one of its first employees.

Forsgren says that he and Martiros were inspired to scale Riffusion by the potential they see in generative AI tools to connect people through creativity.

“The pandemic gave us all a lot more time at home — and led me to learn to play the piano,” Forsgren said. “Music has a great power to connect us in times of isolation. Generative AI is a new and rapidly changing space, and Riffusion aims to harness this technology to deliver a fun new instrument — one that empowers everyone to actively create music throughout their lives.”

The upgraded Riffusion is powered by an audio model that the six-person Riffusion team, including Forsgren and Martiros, trained from scratch. Like the model behind the original Riffusion, the new model is fine-tuned on spectrograms, or visual representations of audio that show the amplitude of different frequencies over time.

Forsgren and Martiros made spectrograms of music and tagged the resulting images with the relevant terms, like “blues guitar,” “jazz piano” and so on. Feeding the model this collection “taught” it what certain sounds “look like” and how it might re-create or combine them given a text prompt (e.g. “lo-fi beat for the holidays,” “mambo but from Kenya,” “a folksy blues song from the Mississippi Delta,” etc.).
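To make the idea of an "image of audio" concrete, here is a minimal sketch of how a magnitude spectrogram can be computed with NumPy using a short-time Fourier transform, then scaled into an 8-bit grayscale image. This is an illustration of the general technique only, not Riffusion's actual preprocessing: the function names, the 1,024-sample frame, the 256-sample hop and the -80 dB floor are all hypothetical choices made for this example.

```python
import numpy as np

def magnitude_spectrogram(signal, frame_size=1024, hop=256):
    """Hypothetical helper: return a (frequency_bins, frames) array of STFT magnitudes."""
    window = np.hanning(frame_size)
    n_frames = 1 + (len(signal) - frame_size) // hop
    # Slice the signal into overlapping, windowed frames
    frames = np.stack(
        [signal[i * hop : i * hop + frame_size] * window for i in range(n_frames)]
    )
    # rfft yields frame_size // 2 + 1 non-negative frequency bins per frame
    spectrum = np.fft.rfft(frames, axis=1)
    # Transpose so rows are frequencies and columns are time, as in a spectrogram plot
    return np.abs(spectrum).T

def to_grayscale_image(spec, floor_db=-80.0):
    """Hypothetical helper: map magnitudes to a uint8 image (louder = brighter)."""
    # Convert to decibels relative to the loudest cell (0 dB at the maximum)
    db = 20 * np.log10(np.maximum(spec, 1e-10) / spec.max())
    db = np.clip(db, floor_db, 0.0)
    # Rescale [floor_db, 0] to [0, 255]; flip rows so low frequencies sit at the bottom
    return ((db - floor_db) / -floor_db * 255).astype(np.uint8)[::-1]

# Usage: one second of a 1 kHz sine tone sampled at 16 kHz
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)

spec = magnitude_spectrogram(tone)
peak_bin = int(spec[:, 0].argmax())
peak_hz = peak_bin * sample_rate / 1024  # bin index times frequency resolution
image = to_grayscale_image(spec)
```

For the test tone, the brightest row of each column lands at 1,000 Hz, which is the "look" of a pure tone in a spectrogram. A training pipeline like the one described would pair images of this kind with text tags such as "blues guitar"; how Riffusion actually builds and labels its images is not described beyond that.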

“Users describe musical qualities through natural language or even recording their own voice, as a method of prompting the model to generate unique outputs,” Forsgren explained. “We think the product will empower music producers and audio engineers to explore new ideas and get inspiration in a totally new way.”

Here’s a sample made using Riffusion’s ability to record a voice with the prompt “punk rock anthem, male vocals, energetic guitar and drums”:
